The See-Stage

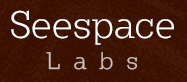

I’ve been working on the See-Stage since 2011 and demoed it at two openings of the Bemis Art Show. It consists of three huge projection screens showing animations that react to the audience. Six ceiling-mounted Kinect cameras point straight down and track where audience members are standing.

I call it a “stage” because the audience is meant to be part of the performance, standing literally on the stage.

Screens

The screens are my own design, the result of endless adjustments and do-overs. I confess I’m a little proud of them. If you want to know, I can also tell you about a lot of bad ways to make screens that I’ve learned along the way.

Each screen is 12 feet wide by 8 feet tall and raised so that a tall person’s standing eye line hits about the center. The screens are freestanding and light, designed with portability in mind: each one breaks apart into three sections, so I can fit it into a large van or moving truck to take to another venue. They are also designed with safety in mind. They are built from flame-resistant materials and include lines securing them to the ceiling as an extra assurance that they won’t fall on anyone.

Projectors

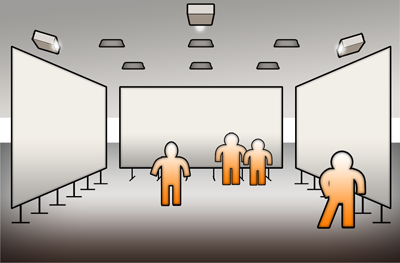

The three See-Stage projectors are Optoma HD23s (well, one is an HD20). I chose this model as a good tradeoff between cost and performance. I don’t claim they are the absolute best, but they definitely work well for what I’m doing. Each projector outputs full HD 1080p. AV geeks sometimes call these things “light cannons” because they are surprisingly bright.

The Bemis Building studio, where the See-Stage currently resides, has a 12-foot ceiling. That height gives the projectors a great angle, because the light reaches the screens with little obstruction from people walking underneath. If an audience member gets within five feet of a screen, the shadow of her head just starts to appear at the bottom of the screen. In my opinion, that amounts to a minor distraction. The downward angle requires keystone correction to make the projected image square on the screen. But since the 12-foot mounting point doesn’t produce too steep a downward angle, it’s virtually impossible to see any difference in image quality compared with a level projection.
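
The trapezoid that keystone correction removes comes from simple geometry: with the projector tilted down, rays through the bottom of the image travel farther before they reach the screen, so the bottom edge lands wider than the top. The Python sketch below estimates that width ratio for an idealized pinhole projector with no lens offset (real projectors usually have one). The function name and the tilt and field-of-view inputs are hypothetical, not measurements of the Optoma units.

```python
import math


def keystone_ratio(tilt_deg: float, half_vertical_fov_deg: float) -> float:
    """Width of the projected image's bottom edge divided by its top edge.

    Idealized model (an assumption): a pinhole projector with no lens offset,
    tilted down by tilt_deg toward a vertical screen.  Rays through the bottom
    row travel farther before reaching the screen, so that edge lands wider.
    """
    # The product of the two tangents controls how unequal the edge distances are.
    t = math.tan(math.radians(tilt_deg)) * math.tan(math.radians(half_vertical_fov_deg))
    if t >= 1.0:
        raise ValueError("the bottom edge of the image never reaches the screen")
    return (1.0 + t) / (1.0 - t)
```

With a hypothetical 10° tilt and a 12° vertical half-angle, `keystone_ratio(10, 12)` comes out at roughly 1.08, so digital keystone correction would have to squeeze the bottom of the frame by a factor of about 1/1.08 (roughly 7%) to square the image. Smaller tilts push the ratio toward 1, which is why a modest mounting angle needs only a mild correction.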

The room’s windows are covered with opaque material to minimize ambient light during the day. Although it’s impossible to eliminate all the projector light that bounces off the screens (they are light cannons, you know!), the result is a crisp, enjoyable image on all three screens.

Kinects

The Kinects are the real magic of the stage. They are what allows the audience to become participants.

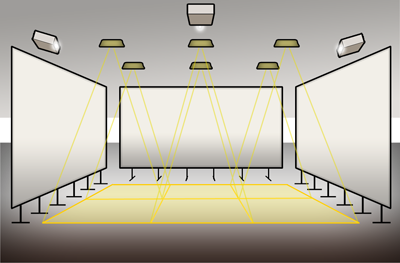

I’ve taken the unusual approach of mounting the Kinects on the ceiling and pointing them straight down. They weren’t designed to be used this way, but it works fantastically. Existing software for making sense of depth data assumes the Kinect is pointed roughly parallel to the floor, so I had to write my own software that analyzes raw depth data from the Kinect cameras and picks out people’s heads and shoulders. The beauty of the top-down view is that you can easily spot one, two, or twenty people moving around beneath the cameras. A normal Kinect setup, like the ones people have in their living rooms, gets confused by a crowd because the people in front block the camera’s view of everyone behind them. This is probably why Xbox games limit you to two players at a time.
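
Looking straight down makes head-finding fairly tractable: anything well above floor level belongs to a person, and within each blob the point closest to the camera is the top of a head. Below is a minimal Python sketch of that idea, not the See-Stage software itself. It assumes a depth frame given as a grid of distances from the camera in millimeters, with 0 meaning “no reading,” and a known camera-to-floor distance; `find_heads` and its thresholds are hypothetical.

```python
from collections import deque
from typing import List, Tuple

# Assumed format: depth in millimeters from the camera; 0 means "no reading".
Frame = List[List[int]]


def find_heads(frame: Frame, floor_mm: int, min_height_mm: int = 1000,
               min_pixels: int = 4) -> List[Tuple[int, int, int]]:
    """Return (row, col, height_above_floor_mm) for each person-sized blob."""
    rows, cols = len(frame), len(frame[0])
    seen = [[False] * cols for _ in range(rows)]

    def is_tall(r: int, c: int) -> bool:
        d = frame[r][c]
        return d > 0 and floor_mm - d >= min_height_mm

    heads = []
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or not is_tall(r0, c0):
                continue
            # Flood-fill one connected blob of "tall" pixels (4-connectivity).
            seen[r0][c0] = True
            queue = deque([(r0, c0)])
            blob = []
            while queue:
                r, c = queue.popleft()
                blob.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if 0 <= nr < rows and 0 <= nc < cols and not seen[nr][nc] and is_tall(nr, nc):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(blob) < min_pixels:
                continue  # too small to be a person; most likely noise
            # Seen from above, the pixel closest to the camera is the top of the head.
            hr, hc = min(blob, key=lambda p: frame[p[0]][p[1]])
            heads.append((hr, hc, floor_mm - frame[hr][hc]))
    return heads
```

A real crowd needs more than this, since two people standing shoulder to shoulder merge into a single blob; one option is to look for several local depth minima within a blob rather than only the single closest pixel.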

In the Kinect programming community, there is a well-known problem: depth data degrades when two or more Kinects cover the same area. Basically, each camera projects its own infrared dot pattern out into the world and uses it to measure how far away surfaces are. When more than one camera does this, their patterns land on the same surfaces and the cameras confuse each other. My design solves this problem in two ways:

1. Cameras are mounted in a way that limits overlap.
2. My shape-recognition algorithm is written to perform well with the noisy data from places where overlap still exists.
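
One generic way to make shape recognition tolerate interference noise is to clean each depth frame before analyzing it. The sketch below is a median filter that skips missing samples, assuming the driver reports 0 where it has no reading. It illustrates the technique and is not the filter See-Stage actually uses; `clean_depth` and its `radius` parameter are hypothetical.

```python
from statistics import median
from typing import List


def clean_depth(frame: List[List[int]], radius: int = 1) -> List[List[int]]:
    """Median-filter a depth frame, ignoring 0 ("no reading") samples."""
    rows, cols = len(frame), len(frame[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Collect the valid readings in the (2*radius+1)^2 window, clipped at the edges.
            window = [frame[rr][cc]
                      for rr in range(max(0, r - radius), min(rows, r + radius + 1))
                      for cc in range(max(0, c - radius), min(cols, c + radius + 1))
                      if frame[rr][cc] > 0]
            # Leave a 0 only when the whole neighborhood is unreadable.
            out[r][c] = int(median(window)) if window else 0
    return out
```

A median suits this job because an isolated bogus reading caused by a neighboring camera’s dots is simply outvoted by the good pixels around it, whereas an average would smear it into them. Holes left by interference get filled from their neighbors in the same pass.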

Over the last year, I’ve had to solve numerous technical problems to get multiple Kinects to feed their data into a single coherent model that the animation software can use to run its programs, and I’m sure I will solve plenty more. At the last Bemis Art Show, I had a couple of cameras mounted; I’ll be increasing that to six with the next See-Stage build. Six cameras cover a very large area, about 20 feet by 20 feet, with comfortable room for about 15 people on the stage. The architecture I’ve designed is meant to scale to at least 48 simultaneous Kinects, covering giant warehouse-sized spaces.
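
Feeding several Kinects into one model implies at least two steps: mapping each camera’s detections into a shared floor plan, and merging the duplicates that appear where cameras overlap. Here is a minimal Python sketch of those two steps. The `Camera` and `Person` types, the per-camera position and rotation, and the 0.3 m merge radius are all hypothetical, and detections are assumed to already be in meters relative to the point directly beneath each camera.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Camera:
    x_m: float      # camera position in the shared floor plan, in meters
    y_m: float
    yaw_deg: float  # rotation of the camera's image axes relative to the floor plan


@dataclass
class Person:
    x_m: float
    y_m: float
    sightings: int = 1  # how many camera detections were merged into this person


def to_world(cam: Camera, local_x: float, local_y: float) -> Tuple[float, float]:
    """Rotate a camera-local floor position by the camera's yaw, then translate it."""
    a = math.radians(cam.yaw_deg)
    return (cam.x_m + local_x * math.cos(a) - local_y * math.sin(a),
            cam.y_m + local_x * math.sin(a) + local_y * math.cos(a))


def fuse(detections: List[Tuple[str, float, float]],
         cameras: Dict[str, Camera],
         merge_radius_m: float = 0.3) -> List[Person]:
    """Merge per-camera detections (camera_id, local_x, local_y) into one list of people."""
    people: List[Person] = []
    for cam_id, lx, ly in detections:
        wx, wy = to_world(cameras[cam_id], lx, ly)
        for p in people:
            if math.hypot(p.x_m - wx, p.y_m - wy) <= merge_radius_m:
                # Two overlapping cameras saw the same person: keep a running average.
                n = p.sightings
                p.x_m = (p.x_m * n + wx) / (n + 1)
                p.y_m = (p.y_m * n + wy) / (n + 1)
                p.sightings = n + 1
                break
        else:
            people.append(Person(wx, wy))
    return people
```

The greedy merge is order-dependent and would need smoothing over time in practice, but it illustrates one reason such an architecture can scale: each camera only has to report a short list of positions, and fusing those lists is cheap compared with processing the raw depth frames.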

See-Stage as a Product

People have asked if I’m trying to make a product out of See-Stage. I’ve definitely considered it, but See-Stage is going to stay a prototype for a while. Working on the animated programs that use See-Stage will do more to move it toward real-world reliability. I want to test the system with lots of participants so that I end up with more confidence in, and knowledge of, what it can do. It will also give me a way to show people how this type of multimedia display can be extremely entertaining and valuable, not just a “look what I can do” gimmick.

Plus, it’s going to be tremendous fun to put talking robots in front of people and watch their reactions.