Wide-area motion tracking (or “wide-area motion capture”) is detecting the positions and movements of objects over a wide area.

That was a pretty general and useless definition. Now let me give you the 2012 Practical Definition of the term: using more than one Kinect to track people inside of an area. “Kinects”, because that is the cheap-ass consumer device that developers are using these days. “People” because what else do you want to track? Iguanas? (Arguably, robots.) And “more than one Kinect” because it’s a slightly difficult problem to solve which ends up being the threshold for what is a “wide” versus “normal” area. An enthusiastic 9-year-old can figure out how to track the area covered by just one Kinect by ripping open the green-and-purple box, plugging the doodad in, and following the gentle advice of the Xbox. The problem of tracking the area of two or more Kinects has been beaten by university students, weekend hackers, and various technocrats. It’s not trivial, and standard hardware/software solutions are as yet unavailable.

Problem #1: Don’t Everybody Infra-Red Talk at Once

With multiple Kinects, the first obstacle you run into is the degradation of signal in the overlap of two tracking areas. Kinects project invisible-to-humans, infra-red symbols with depth information onto the tracking area. And then they read it back with an infra-red camera. So if you have two Kinects splashing these symbols over the same area, it’s like reading a piece of paper that has been run through the printer twice with two different messages inked to the paper. Things get garbled. Thankfully, neither Kinect gives up easily on reading the overlapped symbols and both will manage to have some limited success. Will enough depth data be retrievable? It depends on the application. The easiest way to set your expectations is that the resolution of tracked objects will be decreased. With a non-overlapping Kinect, you can track movements of individual arms and legs. With overlapped Kinects, you might settle for tracking just the body.

There are some clever alleviations for the overlap problem.

One trick puts a vibrating motor on each Kinect. And that’s it. How does it work? Since both the infra-red transmitter and camera are more or less stationary relative to each other, the projected symbols tend to be sharp and unblurred to the corresponding camera, but not to a camera that is getting jiggled on a different timing. It’s a genius hack, but personally, I would be afraid to count on it as a robust solution. I suspect that the motors can occasionally get in sync with each other and generate nasty intermittent blindness. I don’t know this, but I really hate debugging intermittent problems caused by hacks, so I’ll leave that box of chocolates for someone else.

And there are some other tricks too, like polarizing light or using carefully timed LCD shutters. None of it is supported by off-the-shelf hardware at the time I write this.

Problem #2: You’re a Door Not a Window

If you’ve got a large area to cover, you’ll likely have more people and things inside of it. And the chance goes up that some object is hiding other information that’s behind it. Picture big man Penn Jillette standing close to the camera completely obscuring the sleight Teller who would vanish from view in the World’s Laziest Magic Trick. You can alleviate this with Kinects positioned to cover the same area redundantly from different angles, presumably increasing the chance that one of those angles will be clear. But this will worsen problem #1 with all the symbol spew overlapping.

A better way for most situations, particularly a crowd of people standing in a room, is to use ceiling-mounted Kinects that point straight down. Disadvantages of this approach include getting less coverage per device, and being unable to use large amounts of preexisting code which assume a horizontally projected tracking area.
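To put a rough number on "less coverage per device", here is a back-of-the-envelope sketch in Python. The field-of-view angles (57 by 43 degrees) are the commonly quoted nominal figures for the original Kinect and are an assumption here, as are the function names and the idea of sizing the grid at head height instead of floor level. It also ignores the sensor's minimum range, so treat it as an estimate only.

```python
import math

def footprint(distance_m, h_fov_deg=57.0, v_fov_deg=43.0):
    """Width and depth (metres) of the rectangle a downward-pointing
    sensor sees on a plane `distance_m` below it.
    The default angles are assumed nominal values, not measurements."""
    width = 2 * distance_m * math.tan(math.radians(h_fov_deg) / 2)
    depth = 2 * distance_m * math.tan(math.radians(v_fov_deg) / 2)
    return width, depth

def sensors_needed(room_w_m, room_d_m, ceiling_m, track_height_m=1.7):
    """Sensors in a simple non-overlapping grid. The footprint is taken
    at head height, because the view cone is narrowest there."""
    w, d = footprint(ceiling_m - track_height_m)
    return math.ceil(room_w_m / w) * math.ceil(room_d_m / d)

if __name__ == "__main__":
    print(footprint(3.0))               # footprint on the floor from a 3 m ceiling
    print(sensors_needed(6.0, 4.0, 3.0))
```

With those assumed angles, a 6 m by 4 m room with a 3 m ceiling needs 20 sensors to see everyone's head, which is why the ceiling approach eats devices quickly.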

Problem #3: USB Won’t Scale

Kinects have USB connectors. This is great for plugging a couple of them into a computer, but you run into problems with three or more. I believe it is possible on some platforms now with USB 3.0 to get six or more Kinects plugged into one device, but driver support on Linux seems to be problematic. I think people are aware of the problems and fixing them, so eventually the max number of devices that can plug into one computer should be pretty high. But then you have to worry about the maximum practical length of USB cables, which is maybe 20 feet before signal degradation rears its unattractive head. Off-the-shelf USB range extenders I’ve tried have failed, I believe because the Kinect sends power through the USB cable.

A solution is to convert the data to network traffic so it can be shuttled to a central server using ordinary TCP/IP networking. Ah, but can you deal with the massive amount of data generated by the Kinect? 30 frames per second, 640 x 480 resolution, 11 or 16 bits per pixel. Double that if you are sending camera data along with depth data. And multiply that number by the number of Kinects in the system. So that is a firehose. The networking hardware (switches, routers, cables, etc.) will be fine with ordinary 100 Mbits/s equipment. But the CPU processing at the sending and receiving side can get overloaded pretty quick. And I mean just at the network stack level. If you hit the CPU wall, you would need to either compress the data before sending or do application-specific processing to send just what info is needed over the network. Since this is being done to fix a CPU bottleneck, the processing must be very simple or efficient to have a net gain in throughput. For example, quantizing the depth data and run-length compressing the results is cheap enough to fix the bottleneck on an Intel Atom-based system.
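For a feel of the numbers and the quantize-then-run-length idea, here is a minimal Python sketch. It is not the code I ran on the Atom boxes. The function names, the 50 mm quantization step, and the list-of-millimetres row format are all assumptions made for illustration.

```python
def raw_mbits_per_second(width=640, height=480, fps=30, bits_per_pixel=16, streams=1):
    """Uncompressed data rate in megabits per second."""
    return width * height * fps * bits_per_pixel * streams / 1e6

def quantize(depth_row_mm, step_mm=50):
    """Bucket depth readings so that neighbouring pixels on the same
    surface collapse to the same value (step size is an assumption)."""
    return [d // step_mm for d in depth_row_mm]

def rle_encode(values):
    """Run-length encode a sequence into (value, run_length) pairs."""
    runs = []
    for v in values:
        if runs and runs[-1][0] == v:
            runs[-1][1] += 1
        else:
            runs.append([v, 1])
    return [(v, n) for v, n in runs]

def rle_decode(runs):
    """Inverse of rle_encode."""
    out = []
    for v, n in runs:
        out.extend([v] * n)
    return out

if __name__ == "__main__":
    print(raw_mbits_per_second(bits_per_pixel=11))  # one depth stream, 11 bits
    print(raw_mbits_per_second(bits_per_pixel=16))  # one depth stream, 16 bits
    row = [1000, 1010, 1049, 1050, 2000]
    print(rle_encode(quantize(row)))
```

By this arithmetic a single raw depth stream already lands somewhere between 100 and 150 Mbit/s, so it is worth running your own numbers against your link speed before trusting the headroom. The quantization is lossy by design: you give up depth precision you probably didn't need in exchange for long runs that compress almost for free.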

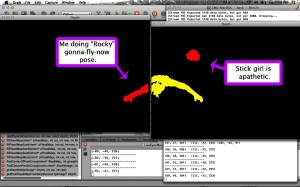

Problem #4: Combining Realities that Disagree

Each Kinect has its own view of the world. Making sense of a wide area means you’ll need to take all those views and combine them into a single model of the world. Disagreements will invariably crop up. In the overlap areas, two or more Kinects will report one object from slightly different views. They will need to be combined into one object in the model. If the relative physical relationships of the Kinects are accurately specified, it helps combine the data. The more difficult issues are time-related. If someone is moving rapidly across the tracking areas of two Kinects, a late frame from the first Kinect may still report the person in its area at the same time as the second Kinect reports them in its own. This is the same kind of artifact you get with double-exposed film: the same object appears in two places at once. It’s important to keep the frame rate high and dropped frames low. In addition, the central model server needs to have rules for dropping information if it’s too old.
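Here is one way the central server's merge step could look, as a hedged Python sketch. It assumes a flat 2D floor plan, a known position and heading for each sensor, and timestamps from a clock shared by all senders. The class names, the 0.1 second age limit, and the 0.4 m merge radius are made up for the example.

```python
import math
from dataclasses import dataclass

@dataclass
class SensorPose:
    x: float    # sensor position in room coordinates, metres
    y: float
    yaw: float  # rotation about the vertical axis, radians

@dataclass
class Detection:
    sensor_id: str
    x: float          # position in the sensor's own frame, metres
    y: float
    timestamp: float  # seconds, from a clock shared by all senders

def to_world(det, pose):
    """Rotate and translate a sensor-local point into room coordinates."""
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    return (pose.x + c * det.x - s * det.y,
            pose.y + s * det.x + c * det.y)

def fuse(detections, poses, now, max_age=0.1, merge_radius=0.4):
    """Drop stale detections, then merge the rest into one point per object."""
    fresh = [d for d in detections if now - d.timestamp <= max_age]
    merged = []  # each entry: [sum_x, sum_y, count]
    for d in fresh:
        wx, wy = to_world(d, poses[d.sensor_id])
        for m in merged:
            cx, cy = m[0] / m[2], m[1] / m[2]
            if math.hypot(wx - cx, wy - cy) <= merge_radius:
                m[0] += wx
                m[1] += wy
                m[2] += 1
                break
        else:
            merged.append([wx, wy, 1])  # nothing close enough: new object
    return [(m[0] / m[2], m[1] / m[2]) for m in merged]

if __name__ == "__main__":
    poses = {"a": SensorPose(0, 0, 0), "b": SensorPose(4, 0, math.pi)}
    dets = [Detection("a", 2.0, 1.0, 9.98),   # fresh, from sensor a
            Detection("b", 2.0, -1.0, 9.99),  # same person, seen by sensor b
            Detection("a", 1.0, 1.0, 9.50)]   # stale frame: gets dropped
    print(fuse(dets, poses, now=10.0))
```

The greedy nearest-centroid merge is the simplest thing that could work. A crowded room would want something smarter, but the ordering matters either way: throw out old data first, then reconcile what's left.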

With a little knowledge and control of the physical area, you can mark certain areas as places where people/objects cannot “appear” and “disappear” and then force the software to make an enlightened guess where tracked objects are based on the depth data. Consider a room that is fully covered by Kinects and has just one entrance. You would expect people to appear and disappear near the entrance, but nowhere else in the room. Say the depth data shows a blob in one corner of the room that might be one big person or two small people. The software can look at the tracking history and see that there was only one person there before and it would not have been possible for a second person to move into that corner.
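The one-entrance reasoning can be sketched as a small rule in Python. This is an illustration under stated assumptions, not a tracker: the function names, the 1 m entrance zone, and the 0.5 m "how far can someone move between frames" limit are all hypothetical.

```python
import math

def near(a, b, radius):
    """True if 2D points a and b are within `radius` metres of each other."""
    return math.hypot(a[0] - b[0], a[1] - b[1]) <= radius

def plausible_counts(blob_pos, candidate_counts, previous_positions,
                     entrance, zone_radius=1.0, max_step=0.5):
    """Filter the head counts a blob might represent using tracking history.

    Near the entrance anyone could have just walked in, so nothing is
    ruled out. Elsewhere, a blob cannot hold more people than were
    within `max_step` of it in the previous frame."""
    if near(blob_pos, entrance, zone_radius):
        return list(candidate_counts)
    reachable = sum(1 for p in previous_positions if near(p, blob_pos, max_step))
    return [c for c in candidate_counts if c <= reachable]

if __name__ == "__main__":
    entrance = (0.0, 0.0)
    history = [(5.0, 3.8), (1.0, 1.0)]  # last known positions of two people
    # Blob in the far corner: one big person or two small ones?
    print(plausible_counts((5.0, 4.0), [1, 2], history, entrance))
```

In the corner example only one tracked person was close enough to be in the blob, so the "two small people" reading gets thrown out. An empty result would mean the history and the depth data flatly disagree, which is worth logging.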

In Conclusion

I think when a technology area is very new, the most meaningful explanation of it will include its problems. Because that tells you what you can do and how hard it’s going to be. I’ve given you what problems I’ve run into over the last year. Soon more people are going to be using wide-area motion tracking, and a lot of these problems will go away or become solvable with standard software and hardware. If you read this and disagree or think the info has gone out of date, please leave a comment. I may update or post new articles based on what I learn from you.